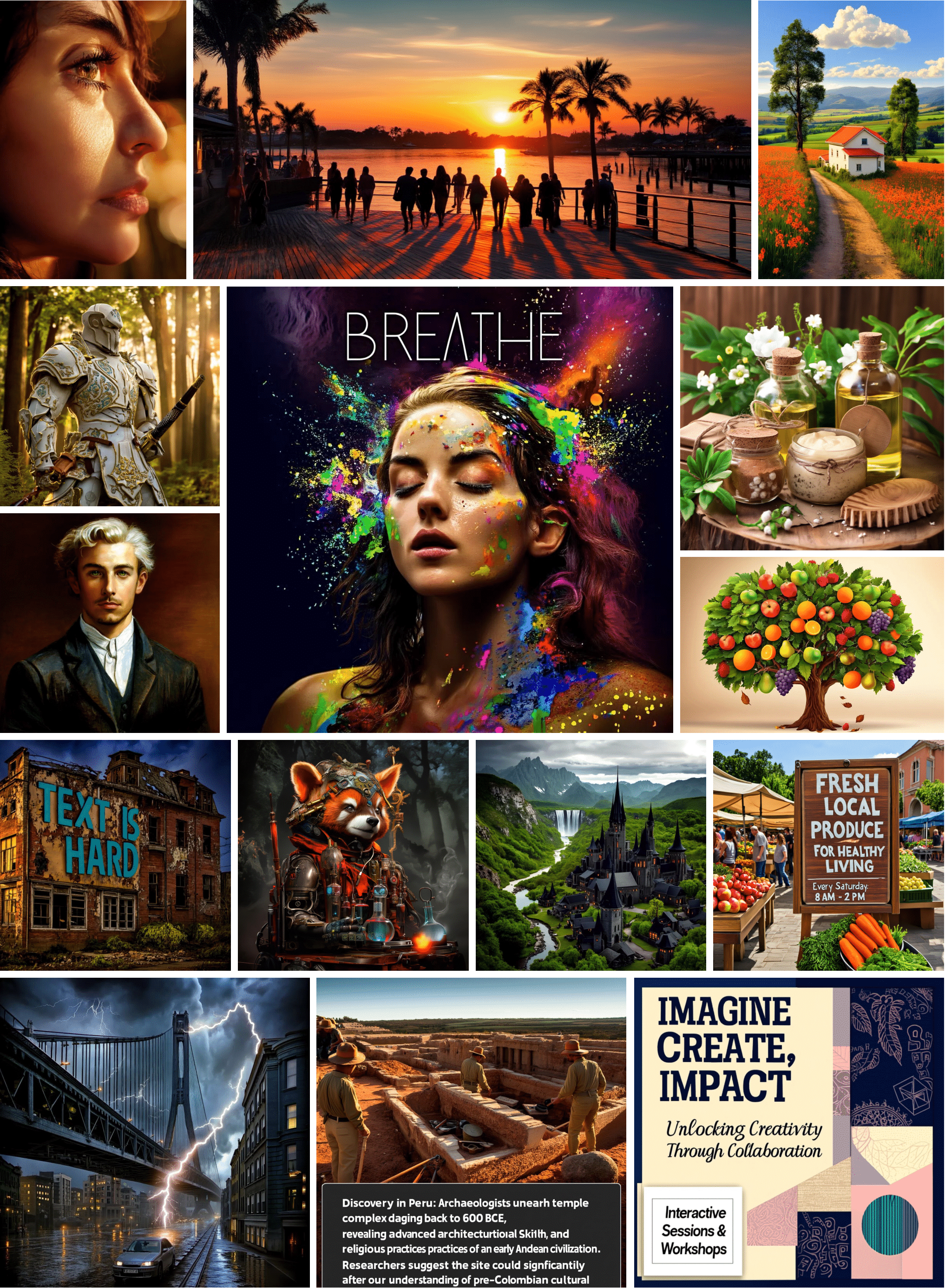

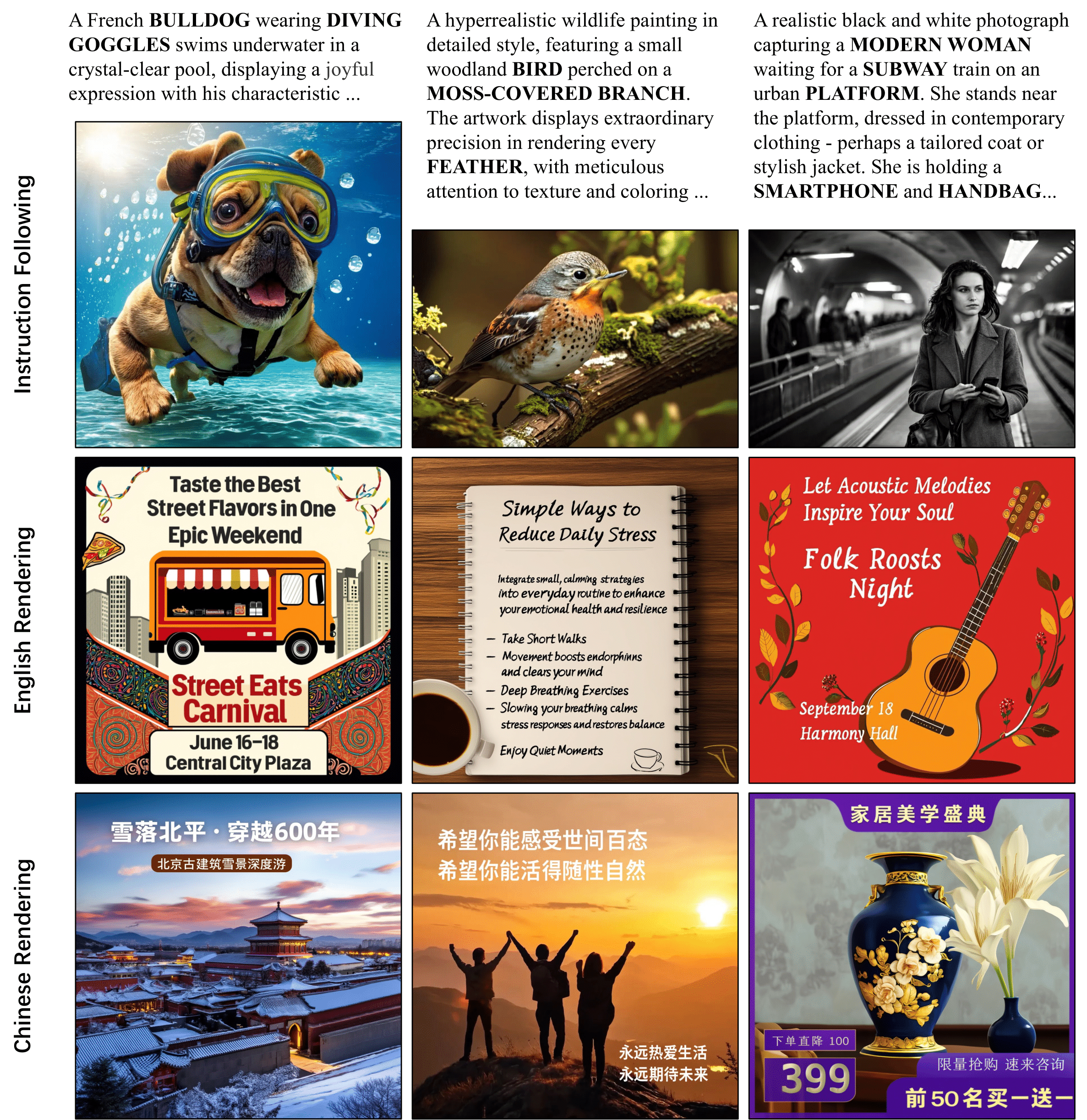

Numerous efforts have been made to extend the "next token prediction" paradigm to visual content, aiming to create a unified approach for both image generation and understanding. Nevertheless, attempts to generate images through autoregressive modeling with discrete tokens have been plagued by issues such as low visual fidelity, distorted outputs, and failure to follow complex instructions when rendering intricate details. These shortcomings are likely attributable to cumulative errors during autoregressive inference or to information loss incurred during discretization. Probably because of this challenge, recent research has increasingly shifted toward jointly training image generation with diffusion objectives and language generation with autoregressive objectives, moving away from unified modeling approaches. In this work, we demonstrate that reinforcement learning can effectively mitigate artifacts and substantially enhance the generation quality of a discrete autoregressive modeling method, thereby enabling seamless integration of image and language generation. Our framework, termed X-Omni, comprises a semantic image tokenizer, a unified autoregressive model for both language and images, and an offline diffusion decoder for image generation. X-Omni achieves state-of-the-art performance in image generation tasks using a 7B language model, producing images with high aesthetic quality while exhibiting strong capabilities in following instructions and rendering long text.
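The abstract does not say which reinforcement learning algorithm X-Omni uses. As a rough illustration only, the sketch below assumes a group-relative policy-gradient setup in the spirit of GRPO: several images are sampled for one prompt, each is scored by a reward model, rewards are standardized within the group, and the log-probabilities of the sampled discrete image tokens are weighted by those advantages. The function names, the `eps` parameter, and the reward source are hypothetical, and the code computes only the scalar surrogate loss; a real trainer would backpropagate it through the model with an autodiff framework and would often add clipping and a KL penalty.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Standardize the rewards of one group of samples drawn for the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std of the group; eps guards against sigma == 0
    return [(r - mu) / (sigma + eps) for r in rewards]


def policy_gradient_surrogate(token_logprobs, rewards):
    """REINFORCE-style surrogate loss over sampled discrete image-token sequences.

    token_logprobs: one list per sampled image, holding log p(token_t | prefix)
        for every image token the autoregressive model generated.
    rewards: one scalar score per sampled image, e.g. from a hypothetical
        reward model judging aesthetics, instruction following, or text rendering.
    """
    advantages = group_relative_advantages(rewards)
    losses = []
    for logps, adv in zip(token_logprobs, advantages):
        seq_logp = sum(logps)  # log-probability of the full token sequence
        # Minimizing -adv * log p raises the probability of above-average samples
        # and lowers it for below-average ones.
        losses.append(-adv * seq_logp)
    return mean(losses)


# Two sampled images for one prompt: the first scored higher than the second.
logps = [[-1.0, -1.0], [-2.0, -2.0]]
print(group_relative_advantages([1.0, 0.0]))
print(policy_gradient_surrogate(logps, [1.0, 0.0]))
```

Standardizing within the group means no separate value network is needed: each sample is judged only relative to its siblings for the same prompt, and a group whose samples all receive the same reward contributes zero advantage.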

@article{geng25xomni,
  author  = {Zigang Geng and Yibing Wang and Yeyao Ma and Chen Li and
             Yongming Rao and Shuyang Gu and Zhao Zhong and Qinglin Lu and
             Han Hu and Xiaosong Zhang and Linus and Di Wang and Jie Jiang},
  title   = {X-Omni: Reinforcement Learning Makes Discrete Autoregressive Image Generative Models Great Again},
  journal = {CoRR},
  volume  = {abs/2507.22058},
  year    = {2025},
}